The European Union's Artificial Intelligence (AI) Act is a pioneering legislative framework for regulating AI systems. First proposed by the European Commission in 2021, the legislation in its current form contains three key elements with significant implications for legal technology and the broader legal community[1]. The European Parliament is set to vote on the legislation in mid-March. If it passes, the law would take full effect in 2026, giving developers and users of AI systems two years to come into compliance[2].

1. Categorization of AI According to Risk Levels

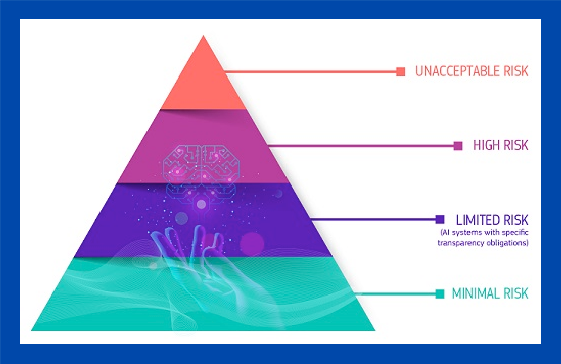

The AI Act introduces a tiered approach that regulates AI systems according to the level of risk they are perceived to pose:

Unacceptable Risk: The Act prohibits AI systems deemed to pose an unacceptable risk. Examples include systems used for social scoring, manipulative or deceptive AI that impairs informed decision-making and can cause significant harm, AI that exploits people's vulnerabilities, and biometric categorization that infers sensitive characteristics such as race, political opinions, or sexual orientation. These explicit bans underline the EU's stance against technologies that infringe on individual rights and freedoms, and its commitment to ethical standards in AI use.

High Risk: A significant portion of the Act addresses high-risk AI applications, which are subject to stringent regulatory requirements. These include AI systems that serve as safety components of products and systems used in sensitive areas affecting public services and fundamental rights. AI systems that profile individuals in those areas are always classified as high-risk, which reflects the Act's emphasis on privacy and data protection.

Limited Risk: For AI applications that pose limited risk, such as chatbots and deepfakes, the Act mandates transparency. People must be able to tell when they are interacting with an AI system or viewing AI-generated content. This provision addresses concerns about deception in AI interactions.

Minimal Risk: The Act leaves minimal-risk AI applications unregulated. These include most AI technologies currently on the market, such as AI-enabled video games and spam filters. However, the rapid evolution of generative AI may prompt a reevaluation of what counts as minimal risk.

2. Distinction Between Providers and Users and Their Obligations

The AI Act places most obligations on the providers (developers) of high-risk AI systems. This includes providers based outside the EU whose systems are used within the Union. Their obligations cover risk management, data governance, technical documentation, and record-keeping. Providers must also ensure that systems are designed for human oversight, accuracy, robustness, and cybersecurity.

Users (deployers) of high-risk AI systems also bear certain responsibilities, though fewer than providers. This applies to users located in the EU and to those in third countries whose system output is used within the EU. The distinction reflects the Act's approach of assigning responsibility according to each party's role in developing or applying an AI system.

3. General Purpose AI (GPAI) Regulation

The Act introduces specific provisions for General Purpose AI (GPAI) models, recognizing their potential for widespread impact across many applications. All GPAI model providers must:

- maintain comprehensive technical documentation,
- comply with EU copyright law, and
- publish summaries of the content used to train their models.

Providers of models that pose systemic risks must also perform model evaluations and adversarial testing and ensure adequate cybersecurity. This reflects the Act's attempt to balance innovation against risk management.

Implications for the Legal Community and Legal Technology

For legal professionals, the AI Act opens a new era of legal scrutiny and responsibility in the development and deployment of AI technologies. Lawyers whose clients reside or do business in the EU will need to navigate a complex regulatory landscape. They must ensure compliance while still leveraging AI's benefits in legal practice, from document analysis to predictive analytics. Legal professionals will play a crucial role in interpreting the Act, advising on compliance strategies, and representing clients in related disputes.

Overall, the EU AI Act establishes a comprehensive regulatory framework that will significantly affect the legal technology sector and the broader legal community. By setting clear rules and responsibilities, the Act aims to foster innovation in AI while safeguarding fundamental rights and promoting trust in AI technologies.

[1] "The AI Act Explorer," EU Artificial Intelligence Act.

[2] "EU Poised to Enact Sweeping AI Rules With US, Global Impact," Bloomberg Law (bloomberglaw.com).